Cluster Setup

My home cluster consists of five Raspberry Pi 5 nodes, each equipped with 16 GB of RAM and 512 GB of storage and running Ubuntu Server 24.10. The nodes are named as follows:

- Management Node: Zeus, king and coordinator of the gods
- Cluster Node 1: Apollo, god of knowledge and light
- Cluster Node 2: Artemis, goddess of the moon, the hunt, and wild nature
- Cluster Node 3: Ares, the brute-force power
- Cluster Node 4: Hermes, fast and efficient messenger

I configured the DHCP server on the firewall to assign a fixed IP address to every node in the cluster. To reach the nodes by name, I also gave each one a unique hostname in the local DNS server.

| DNS Name | IP Address | Services |
|---|---|---|
| zeus.home | 192.168.2.198 | Salt master, InfluxDB, Loki, Grafana, Slurm head node |
| apollo.home | 192.168.2.155 | Open WebUI |
| artemis.home | 192.168.2.146 | MoviePulse, Video2Audio, DataSpider |
| ares.home | 192.168.2.158 | NUT server, Enviro-Watch |
| hermes.home | 192.168.2.177 | WordPress, DDNS client (inadyn), Uptime monitor |
| pihole.home (rp4) | 192.168.2.181 | Pi-hole service, NTP server, SkyWatch |
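To confirm that the DNS records match the DHCP assignments, the table above can be checked from any machine on the network. The following is a minimal sketch, not part of the original setup: the names `EXPECTED` and `check_dns` are illustrative, and the resolver is passed in as a parameter so the logic can be exercised without a live DNS server.

```python
import socket

# Expected name-to-address mapping, copied from the table above.
EXPECTED = {
    "zeus.home": "192.168.2.198",
    "apollo.home": "192.168.2.155",
    "artemis.home": "192.168.2.146",
    "ares.home": "192.168.2.158",
    "hermes.home": "192.168.2.177",
    "pihole.home": "192.168.2.181",
}


def check_dns(expected, resolve=socket.gethostbyname):
    """Return {name: (expected_ip, actual)} for names that resolve incorrectly."""
    mismatches = {}
    for name, ip in expected.items():
        try:
            actual = resolve(name)
        except OSError as exc:  # socket.gaierror is a subclass of OSError
            actual = f"lookup failed: {exc}"
        if actual != ip:
            mismatches[name] = (ip, actual)
    return mismatches
```

Calling `check_dns(EXPECTED)` on the home network should return an empty dictionary when every record is in place; any entry it returns names a host whose DNS record and DHCP lease disagree.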

Configuration Managers

Configuration management tools automate the deployment, configuration, and maintenance of systems and applications across nodes. They let administrators describe configurations declaratively and apply them repeatedly without manual intervention. Several such tools have been developed over the years, including CFEngine, Puppet, Chef, SaltStack, and Ansible. Each has its own strengths, philosophy, and ecosystem.

In my home lab, I manage a cluster of Raspberry Pi 5 nodes, and for this setup, I chose SaltStack as my configuration management solution. Here's why:

- Agent-based with ZeroMQ for Speed and Scale: SaltStack uses a fast, event-driven architecture powered by ZeroMQ. Its agent-based design (using salt-minion) lets me manage nodes asynchronously, and it performs well even on resource-constrained devices like the Raspberry Pi.
- YAML + Jinja Syntax for Configuration: Salt's configuration system is built on YAML and Jinja, which makes it both readable and programmable. This allows me to create DRY, templated configurations that are easy to maintain and reuse across different nodes and environments.
- Built-in Remote Execution: Beyond applying states, SaltStack includes a powerful remote execution system that lets me run ad-hoc commands across the cluster for monitoring, diagnostics, or quick fixes.
- Active Community and Documentation: SaltStack has a strong and active community, and its documentation is mature and practical, especially for Debian/Ubuntu-based systems like Raspberry Pi OS.

SaltStack Setup

The SaltStack master is installed on the management node (Zeus) while the Salt minions are deployed on all other nodes in the cluster. From Zeus I can ping all minions:

    $ sudo salt '*' test.ping
    artemis:
        True
    ares:
        True
    hermes:
        True
    apollo:
        True
    theater:
        True
    pihole:
        True
    children:
        True

I have defined two node groups to better reflect the logical grouping of the Raspberry Pi nodes:

    nodegroups:
      core: 'L@apollo,artemis,ares,hermes'
      infra: 'L@pihole,theater,children'

Now I can target a specific group:

    $ sudo salt -N core test.ping
    hermes:
        True
    artemis:
        True
    apollo:
        True
    ares:
        True

One of the features I appreciate most about SaltStack is the ability to execute commands simultaneously across all nodes. For example, to get the uptime across all nodes:

    $ sudo salt '*' cmd.run 'uptime'
    ares:
        05:14:16 up 21:12, 0 user, load average: 0.00, 0.00, 0.00
    artemis:
        05:14:16 up 21:13, 0 user, load average: 0.00, 0.00, 0.00
    hermes:
        05:14:16 up 4:37, 1 user, load average: 0.00, 0.00, 0.00
    apollo:
        05:14:16 up 21:13, 0 user, load average: 0.01, 0.01, 0.00
    theater:
        22:14:16 up 1 day, 7:20, 1 user, load average: 0.00, 0.02, 0.00
    pihole:
        05:14:16 up 4:07, 0 user, load average: 0.08, 0.02, 0.01
    children:
        22:14:16 up 1 day, 6:09, 1 user, load average: 0.74, 0.27, 0.19

To get the Python version installed on each node:

    $ sudo salt '*' cmd.run 'python3 --version'
    hermes:
        Python 3.12.3
    ares:
        Python 3.12.3
    artemis:
        Python 3.12.3
    apollo:
        Python 3.12.7
    theater:
        Python 3.12.3
    pihole:
        Python 3.12.3
    children:
        Python 3.12.3

To check the CPU throttling status on all Raspberry Pi nodes, which can indicate issues like undervoltage, overheating, or frequency capping:

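    $ sudo salt '*' cmd.run 'vcgencmd get_throttled'
    artemis:
        throttled=0x0
    ares:
        throttled=0x0
    hermes:
        throttled=0x0
    apollo:
        throttled=0x0
    theater:
        throttled=0x0
    pihole:
        throttled=0x0
    children:
        throttled=0xe0000

A value of 0x0 means no throttling event has been recorded since boot. On children, 0xe0000 sets bits 17 to 19, which record that frequency capping, throttling, and the soft temperature limit have each occurred since boot.

To update and upgrade system packages: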

    $ sudo salt '*' pkg.refresh_db
    $ sudo salt '*' pkg.upgrade

To install packages on nodes:

    $ sudo salt '*' pkg.install pkgs='["htop", "tmux"]'

To install Docker on a group of nodes:

    $ sudo salt -N core cmd.script https://get.docker.com
    $ sudo salt -N core cmd.run 'usermod -aG docker admin'
    $ sudo salt -N core cmd.run 'docker --version'

To reboot or shut down specific nodes:

    $ sudo salt '<minion_id>' system.reboot
    $ sudo salt '<minion_id>' system.shutdown

Shared Folder

Creating a shared folder accessible by all nodes provides a centralized location for storing and accessing common data, configurations, logs, and binaries. It simplifies synchronization and coordination across the Raspberry Pi cluster.

I configured the shared folder on my Synology NAS to centralize storage for the cluster. By hosting it on the NAS, I can leverage the same snapshot and external backup workflows used for the rest of my data, ensuring consistent and reliable backups.

To enhance security, I created a dedicated user account on the NAS with access restricted exclusively to this shared folder. All nodes in the cluster mount the shared folder automatically over CIFS, using this fstab entry:

    //192.168.2.161/rp_cluster /opt/rp_cluster cifs credentials=/path/to/credentials,uid=1000,gid=1000,defaults,noatime,nofail,_netdev,x-systemd.automount 0 0

Options are:

- uid=1000: Sets the user ID (owner) of the mounted files to user ID 1000 (usually the first regular user on Ubuntu).
- gid=1000: Sets the group ID of the mounted files to group ID 1000, typically matching the primary group of the same user.
- defaults: Applies the default mount options: rw, suid, dev, exec, auto, nouser, and async.
- noatime: Disables updates to file access time on read. Improves performance, especially on flash storage, by reducing unnecessary disk writes.
- nofail: Ensures that the system continues booting even if the mount fails, preventing boot failures when the network share is unavailable.
- _netdev: Informs the system that the mount is a network device, and should only be mounted after the network is up.
- x-systemd.automount: Tells systemd to mount this share on first access instead of at boot time. Helps prevent mount failures caused by slow or delayed network availability.
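Because a single typo in this entry can leave a node without the share, it may be worth checking the line mechanically before rolling it out. The sketch below is illustrative only: `parse_fstab_line` and `REQUIRED` are hypothetical names, and the choice of which options count as required is an assumption based on the descriptions above.

```python
def parse_fstab_line(line):
    """Split one fstab entry into its six whitespace-separated fields."""
    device, mountpoint, fstype, options, dump, passno = line.split()
    parsed = {}
    for opt in options.split(","):
        key, _, value = opt.partition("=")
        parsed[key] = value or True  # flag options such as nofail carry no value
    return {
        "device": device,
        "mountpoint": mountpoint,
        "fstype": fstype,
        "options": parsed,
        "dump": int(dump),
        "passno": int(passno),
    }


# The entry from this section, split across lines for readability.
ENTRY = (
    "//192.168.2.161/rp_cluster /opt/rp_cluster cifs "
    "credentials=/path/to/credentials,uid=1000,gid=1000,defaults,"
    "noatime,nofail,_netdev,x-systemd.automount 0 0"
)

# Assumption: the options a boot-safe network mount should carry.
REQUIRED = {"nofail", "_netdev", "x-systemd.automount"}

entry = parse_fstab_line(ENTRY)
missing = REQUIRED - set(entry["options"])
print("missing options:", sorted(missing))
```

An empty list means the entry carries all three options that keep a slow or unavailable NAS from blocking the boot.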

Testing the write speed:

    $ dd if=/dev/zero of=/opt/rp_cluster/testfile bs=1M count=1024 conv=fdatasync
    1024+0 records in
    1024+0 records out
    1073741824 bytes (1.1 GB, 1.0 GiB) copied, 9.45947 s, 114 MB/s

Testing the read speed:

    $ dd if=/opt/rp_cluster/testfile of=/dev/null bs=1M count=1024
    1024+0 records in
    1024+0 records out
    1073741824 bytes (1.1 GB, 1.0 GiB) copied, 9.12901 s, 118 MB/s

These results indicate that both the write and read speeds surpass those typically achieved with standard MicroSD cards.

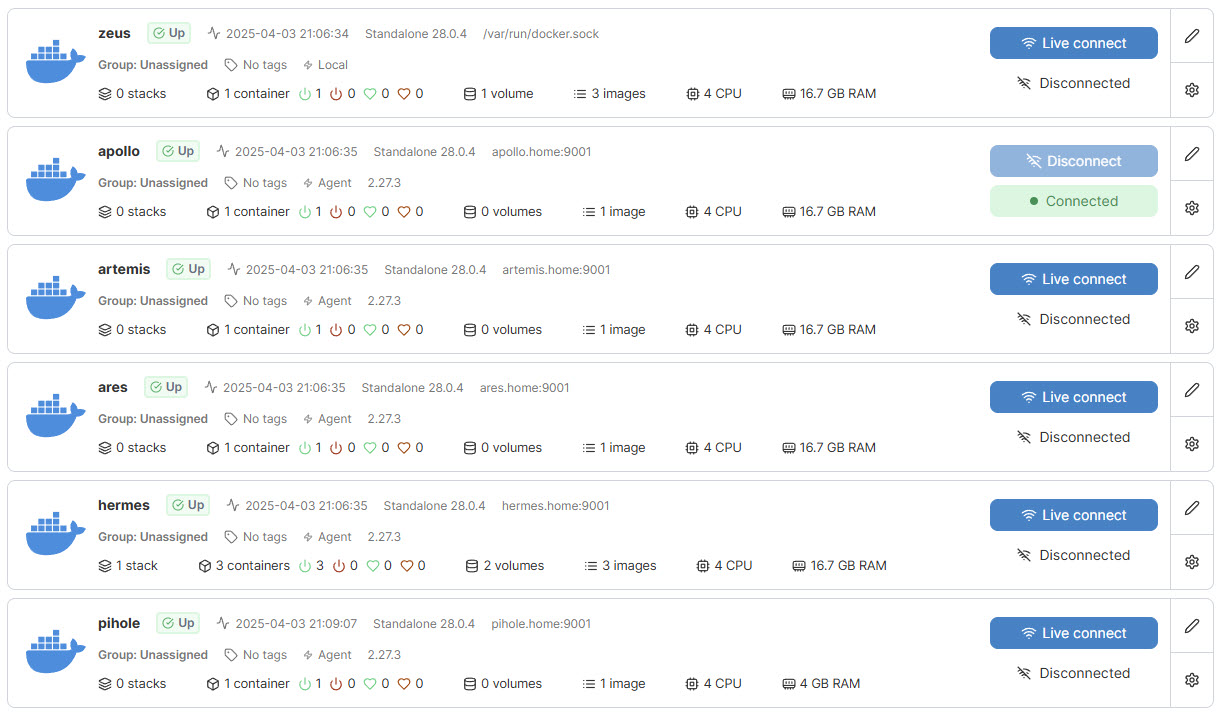
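The dd figures can be reproduced from the byte count and elapsed time, which also makes it easy to compare them against the network link. The sketch below assumes the nodes reach the NAS over Gigabit Ethernet (125 MB/s raw line rate); that link speed is an assumption, not something stated above, and the function name is illustrative.

```python
def throughput_mb_s(num_bytes, seconds):
    """Throughput in decimal megabytes per second, the unit dd reports."""
    return num_bytes / seconds / 1e6


# Assumption: a 1 Gbit/s link, i.e. 125 MB/s before protocol overhead.
GIGABIT_MB_S = 1e9 / 8 / 1e6

# Byte counts and timings taken from the dd output above.
write_mb_s = throughput_mb_s(1073741824, 9.45947)
read_mb_s = throughput_mb_s(1073741824, 9.12901)

for label, value in (("write", write_mb_s), ("read", read_mb_s)):
    share = value / GIGABIT_MB_S
    print(f"{label}: {value:.1f} MB/s ({share:.0%} of the assumed line rate)")
```

If the Gigabit assumption holds, both directions run at over 90% of the line rate, which suggests the network link rather than the NAS or the Pi is the limiting factor.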

Container Management Dashboard

In my home lab environment, I utilize Docker containers as the primary deployment technology, orchestrating them across multiple nodes within my Raspberry Pi cluster.

A container management dashboard helps manage these distributed containers efficiently. Such dashboards are graphical interfaces that simplify the management of containerized applications running on platforms such as Docker and Kubernetes. They provide visual tools to handle containers, images, networks, volumes, and clusters without relying solely on command-line operations.

I use Portainer, an open-source, lightweight container management platform. Portainer simplifies the deployment, monitoring, and maintenance of containerized applications across various nodes. It offers centralized access control and real-time visibility into the cluster's status. I installed Portainer on my management node, Zeus, where it automatically connects to the local Docker engine or Kubernetes cluster.

Portainer can manage multiple environments using agents. The Portainer Agent is installed on each of the other nodes, and the main Portainer server connects to each node through its agent.
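Before adding a node as an environment, it can help to confirm from Zeus that its agent is reachable. The following sketch is a plain TCP check, not a Portainer API call. It assumes the agent is published on port 9001, the Portainer Agent default, and the function names are illustrative.

```python
import socket

# Assumption: the agent listens on its default port; adjust if published differently.
AGENT_PORT = 9001


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def unreachable_agents(hosts, port=AGENT_PORT):
    """List the hosts on which nothing accepts connections on the agent port."""
    return [host for host in hosts if not is_port_open(host, port)]
```

For example, `unreachable_agents(["apollo.home", "artemis.home", "ares.home", "hermes.home"])` returns the nodes whose agent still needs attention. A successful connection only shows that something is listening on the port, not that it is a healthy agent.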

Remote Development

Remote development refers to the practice of writing, testing, and debugging code on a local machine while executing it on a remote system. This approach is particularly beneficial when developing for hardware like Raspberry Pi devices. It allows developers to leverage the computational resources and familiar development environment of their primary computers.

Various IDEs offer robust support for remote development. For instance, Visual Studio Code (VS Code) provides the Remote - SSH extension, while JetBrains offers comprehensive Remote Development solutions. In my development workflow, I utilize VS Code's remote development capabilities.

To enhance the efficiency of my remote development workflow, I have:

- Enabled Passwordless SSH Authentication: I have configured passwordless SSH authentication from my desktop PC to all Raspberry Pi nodes. This setup ensures that VS Code can establish connections without prompting for passwords, thereby facilitating a more efficient development environment.
- Defined Host Aliases in SSH Configuration: I configured the ~/.ssh/config file to include host aliases, allowing for simplified and more efficient SSH connections. This setup enables me to connect to remote servers using short, memorable aliases instead of full hostnames.

      Host zeus
          Hostname zeus.home
          User admin

  The VS Code Remote - SSH extension reads this SSH configuration and lists the hosts.
- Installed Extensions: VS Code offers a rich ecosystem of extensions that significantly enhance its functionality for developers across all domains. Through the Extensions Marketplace, users can install tools for language support, code formatting, linters, debugging, container integration, Git tools, Jupyter notebooks, and more. To accommodate diverse project requirements, I have installed pertinent extensions such as C/C++, Python, Golang, Git, etc.
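The host aliases described in the list above follow the same pattern for every node, so the stanzas can be generated rather than typed by hand. This is a minimal sketch: it assumes every node uses the `admin` user and the `.home` domain shown for Zeus, and `ssh_config` is a hypothetical helper name.

```python
# Node names from the Cluster Setup section.
NODES = ["zeus", "apollo", "artemis", "ares", "hermes"]


def ssh_config(nodes, domain="home", user="admin"):
    """Render one Host stanza per node, suitable for ~/.ssh/config."""
    stanzas = []
    for node in nodes:
        stanzas.append(
            f"Host {node}\n"
            f"    HostName {node}.{domain}\n"
            f"    User {user}\n"
        )
    return "\n".join(stanzas)


print(ssh_config(NODES))
```

The printed text can be appended to ~/.ssh/config after review. Keeping the node list in one place means a new Pi only needs one extra entry in `NODES`.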